Cache memory

Cache memory is a small, fast, volatile memory that sits close to the CPU and runs at a speed much nearer to the CPU's than main memory does. It holds the instructions and data that a program uses most often during execution.

Cache memory speeds up program execution because accessing the cache is faster than accessing RAM. Increasing the cache size improves system performance, but only up to a point.

Cache mapping

Cache mapping is the scheme that decides where a block brought in from main memory is placed in cache memory.

There are three methods of cache mapping:

- Direct mapping

- Associative mapping

- Set-associative mapping

Direct mapping

In direct mapping, each main memory block is assigned to one specific line in cache memory.

Two terms need explaining before going further: “block” and “line”.

What is a block?

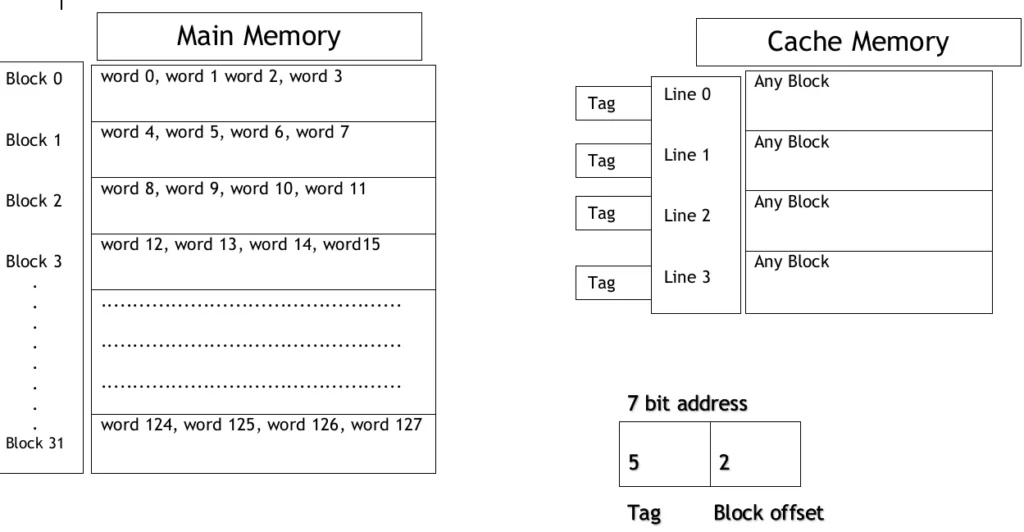

Blocks are the fixed-size portions into which main memory is divided, and each block stores program instructions or data as words. In other words, main memory contains multiple blocks, and each block contains multiple words. For this article, assume that main memory can store a total of 128 words and that each block contains 4 words. The total number of blocks in main memory is then 128/4 = 32.

What is a line?

Lines are the portions of cache memory that store program instructions and data. For this article, assume that the cache can store 16 words and that each line stores 4 words. The total number of lines in the cache is then 16/4 = 4.
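The arithmetic for the running example can be written out as a short sketch. The constant names and the `block_of` helper are illustrative, and the code assumes word addresses start at 0, which the article does not state explicitly.

```python
# Sizes taken from the article's running example.
MAIN_MEMORY_WORDS = 128
CACHE_WORDS = 16
WORDS_PER_BLOCK = 4  # a cache line holds exactly one block

num_blocks = MAIN_MEMORY_WORDS // WORDS_PER_BLOCK  # 128 / 4
num_lines = CACHE_WORDS // WORDS_PER_BLOCK         # 16 / 4


def block_of(word_address):
    """Return the block number holding a word (assumes addresses start at 0)."""
    return word_address // WORDS_PER_BLOCK


print(num_blocks, num_lines)  # 32 4
print(block_of(13))           # 3, because words 12..15 form block 3
```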

In direct mapping, the line size must equal the block size. A block is mapped to a cache line by the formula K mod n, where “K” is the block number in main memory and “n” is the total number of lines in the cache. For example, to find the line that holds block 3, compute 3 mod 4, because this example has 4 lines. Dividing 3 by 4 leaves a remainder of 3, so block 3 is stored in line 3.
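A minimal sketch of the K mod n rule follows, assuming blocks and lines are both numbered from 0. The function names are hypothetical and chosen only for this illustration.

```python
def direct_mapped_line(block_number, num_lines=4):
    """Line that a block maps to under direct mapping: K mod n."""
    return block_number % num_lines


def blocks_sharing_line(line, num_blocks=32, num_lines=4):
    """All main-memory blocks that compete for the same cache line."""
    return [b for b in range(num_blocks) if b % num_lines == line]


print(direct_mapped_line(3))   # 3
print(direct_mapped_line(4))   # 0
print(blocks_sharing_line(3))  # [3, 7, 11, 15, 19, 23, 27, 31]
```

With 32 blocks and 4 lines, 8 blocks compete for each line, which is why only one of them can be cached at a time.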

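The address of a word is represented by 7 bits, because main memory holds 128 = 2^7 words. With 4 words per block and 4 cache lines, the address is divided into three fields: a 3-bit tag, a 2-bit line number, and a 2-bit block offset.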

- Tag

- Line number in cache

- Block offset
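The three fields can be extracted from an address with shifts and masks. The sketch below assumes the field widths implied by the running example (4 words per block gives 2 offset bits, 4 lines gives 2 line bits, and the remaining 3 bits are the tag); the name `split_direct` is illustrative.

```python
OFFSET_BITS = 2  # 4 words per block
LINE_BITS = 2    # 4 cache lines
TAG_BITS = 3     # 7 - 2 - 2 remaining high-order bits


def split_direct(address):
    """Split a 7-bit word address into (tag, line, offset)."""
    offset = address & ((1 << OFFSET_BITS) - 1)
    line = (address >> OFFSET_BITS) & ((1 << LINE_BITS) - 1)
    tag = address >> (OFFSET_BITS + LINE_BITS)
    return tag, line, offset


# Address 93 is 0b1011101: tag 101, line 11, offset 01.
print(split_direct(0b1011101))  # (5, 3, 1)
```

Address 93 lies in block 23 (93 // 4), and 23 mod 4 = 3, which agrees with the line field extracted above.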

Advantage of direct mapping

The main advantage of direct mapping is that the CPU needs to check only one line for a word. If the word is found in the cache, it is a cache hit; if the word’s block is not present in the cache, it is a cache miss. So in direct mapping a single tag comparison tells the CPU whether the word is in the cache, which keeps the lookup fast.

Disadvantage of direct mapping in cache

The worst case for direct mapping arises when two blocks that map to the same line are used alternately. Suppose the CPU requests a word in block 0 and it is not in the cache: a cache miss occurs and block 0 is loaded into line 0. The CPU then requests a word from block 4, which also maps to line 0 (4 mod 4 = 0), so another miss occurs and block 4 replaces block 0. If the CPU now requests a word from block 0 again, it misses once more because block 0 is no longer in the cache. This situation is known as a conflict miss. It occurs even when other lines in the cache are empty, because a block cannot be loaded into any line other than its own. Associative mapping is another method that overcomes this problem.
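A conflict miss can be reproduced with a small simulation. This is a sketch under the article's assumptions (4 lines, K mod n placement), tracking whole blocks rather than individual words; `simulate_direct_mapped` is a hypothetical helper. Blocks 0 and 4 are used because both map to line 0.

```python
def simulate_direct_mapped(block_requests, num_lines=4):
    """Return 'hit' or 'miss' for each requested block number."""
    lines = [None] * num_lines  # block currently held by each line
    results = []
    for block in block_requests:
        index = block % num_lines  # K mod n
        if lines[index] == block:
            results.append("hit")
        else:
            results.append("miss")
            lines[index] = block  # evict whatever was in this line
    return results


# Blocks 0 and 4 both map to line 0, so they keep evicting each other.
print(simulate_direct_mapped([0, 4, 0]))  # ['miss', 'miss', 'miss']
# Blocks 0 and 5 map to different lines, so the second access to 0 hits.
print(simulate_direct_mapped([0, 5, 0]))  # ['miss', 'miss', 'hit']
```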

Associative mapping

Associative mapping uses the same scenario as the direct mapping example. The main difference is that any block can be stored in any line of the cache, so the way a word is accessed changes. The word is still accessed by a 7-bit address, but the address has only two parts: the first 5 bits are the tag and the last 2 bits are the block offset.
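The effect of letting any block use any line can be sketched in the same style. The article does not say which block is evicted when the cache is full, so this example assumes a least-recently-used (LRU) policy; that policy and the name `simulate_fully_associative` are assumptions made for illustration.

```python
from collections import OrderedDict


def simulate_fully_associative(block_requests, num_lines=4):
    """Return 'hit' or 'miss' per request; any block may use any line."""
    cache = OrderedDict()  # block numbers, least recently used first
    results = []
    for block in block_requests:
        if block in cache:
            cache.move_to_end(block)  # mark as most recently used
            results.append("hit")
        else:
            results.append("miss")
            if len(cache) >= num_lines:
                cache.popitem(last=False)  # assumed LRU eviction
            cache[block] = True
    return results


# Blocks 0 and 4 can now live side by side, so the third request hits.
print(simulate_fully_associative([0, 4, 0]))  # ['miss', 'miss', 'hit']
```

The membership test `block in cache` stands in for comparing the 5-bit tag against every line.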

Advantage of associative mapping

The main advantage of associative mapping is that it increases the cache hit rate: because a block is not restricted to a specific line, two blocks never have to evict each other while other lines are free.

Disadvantage of associative mapping

The disadvantage of this method is that the tag must be compared against every line of the cache to find a block, which can be more time-consuming than checking only one line’s tag, as in direct mapping. Set-associative mapping is a further method that combines direct and associative mapping.

Set-associative mapping

Set-associative mapping is also known as K-way set-associative mapping. It is a hybrid of direct and associative mapping. In this mapping, the cache lines are grouped into sets of K lines each, and the value of K gives the scheme its name:

- 2-way set associative

- 4-way set associative

- 8-way set associative etc.

For this mapping we first divide the cache lines into sets, and the number of sets is the number of lines / K. Suppose we use 2-way set-associative mapping: in the running example that gives 4/2 = 2, so the cache is divided into 2 sets.

The set that holds a block is then found with the same kind of formula as in direct mapping, K mod n. Here K is the block number (not the number of ways) and n is the total number of sets, so the result is the set in which the block can be placed.

The similarity with direct mapping is that each block is restricted to one specific set; the similarity with associative mapping is that the block can be placed in any line within that set.
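The two-step placement (pick the set by modulo, then use any line inside it) can be sketched as follows. The article does not specify a replacement policy within a set, so LRU is assumed here; `set_index` and `simulate_set_associative` are hypothetical names.

```python
from collections import OrderedDict


def set_index(block_number, num_lines=4, ways=2):
    """Set that a block maps to: block number mod number of sets."""
    num_sets = num_lines // ways
    return block_number % num_sets


def simulate_set_associative(block_requests, num_lines=4, ways=2):
    """Return 'hit' or 'miss' per request for a K-way cache (K = ways)."""
    num_sets = num_lines // ways
    sets = [OrderedDict() for _ in range(num_sets)]
    results = []
    for block in block_requests:
        current = sets[block % num_sets]
        if block in current:
            current.move_to_end(block)  # most recently used
            results.append("hit")
        else:
            results.append("miss")
            if len(current) >= ways:
                current.popitem(last=False)  # assumed LRU eviction
            current[block] = True
    return results


print(set_index(3), set_index(4))             # 1 0
# Blocks 0 and 4 share set 0, which has room for both.
print(simulate_set_associative([0, 4, 0]))    # ['miss', 'miss', 'hit']
# Three blocks in set 0 exceed its 2 lines, so block 0 is evicted.
print(simulate_set_associative([0, 2, 4, 0])) # ['miss', 'miss', 'miss', 'miss']
```

The second trace shows that conflict misses are reduced rather than eliminated: they return once more than K blocks compete for the same set.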

The address is represented by 7 bits and it has three parts.

- Tag 4 bits.

- Set number 1 bit.

- Block offset 2 bits.
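The address layouts of the three schemes differ only in how many bits select a line or set. The sketch below uses a single hypothetical helper, `split_address`, with the index width as a parameter: 2 bits for direct mapping, 1 bit for the 2-way example, and 0 bits for associative mapping. It assumes the 7-bit address and 2-bit offset used throughout the article.

```python
def split_address(address, index_bits, offset_bits=2):
    """Split a word address into (tag, index, offset).

    index is the line number (direct mapping) or the set number
    (set-associative); with index_bits=0 it is always 0.
    """
    offset = address & ((1 << offset_bits) - 1)
    index = (address >> offset_bits) & ((1 << index_bits) - 1)
    tag = address >> (offset_bits + index_bits)
    return tag, index, offset


address = 0b1011101  # word 93, which lies in block 23
print(split_address(address, index_bits=2))  # direct:      (5, 3, 1)
print(split_address(address, index_bits=1))  # 2-way:       (11, 1, 1)
print(split_address(address, index_bits=0))  # associative: (23, 0, 1)
```

Fewer index bits leave more bits for the tag: 3, 4, and 5 tag bits respectively, matching the field widths given for each scheme.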

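Set-associative mapping is a compromise between the other two methods: it suffers fewer conflict misses than direct mapping and needs fewer tag comparisons per lookup than associative mapping.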